The Evolution of Large Language Models: From GPT to Multimodal AI

The field of artificial intelligence has witnessed unprecedented growth in recent years, with large language models (LLMs) emerging as one of the most transformative technologies of our time. These sophisticated neural networks have revolutionized how machines understand and generate human language, opening new possibilities across industries and applications.

The Foundation: Transformer Architecture

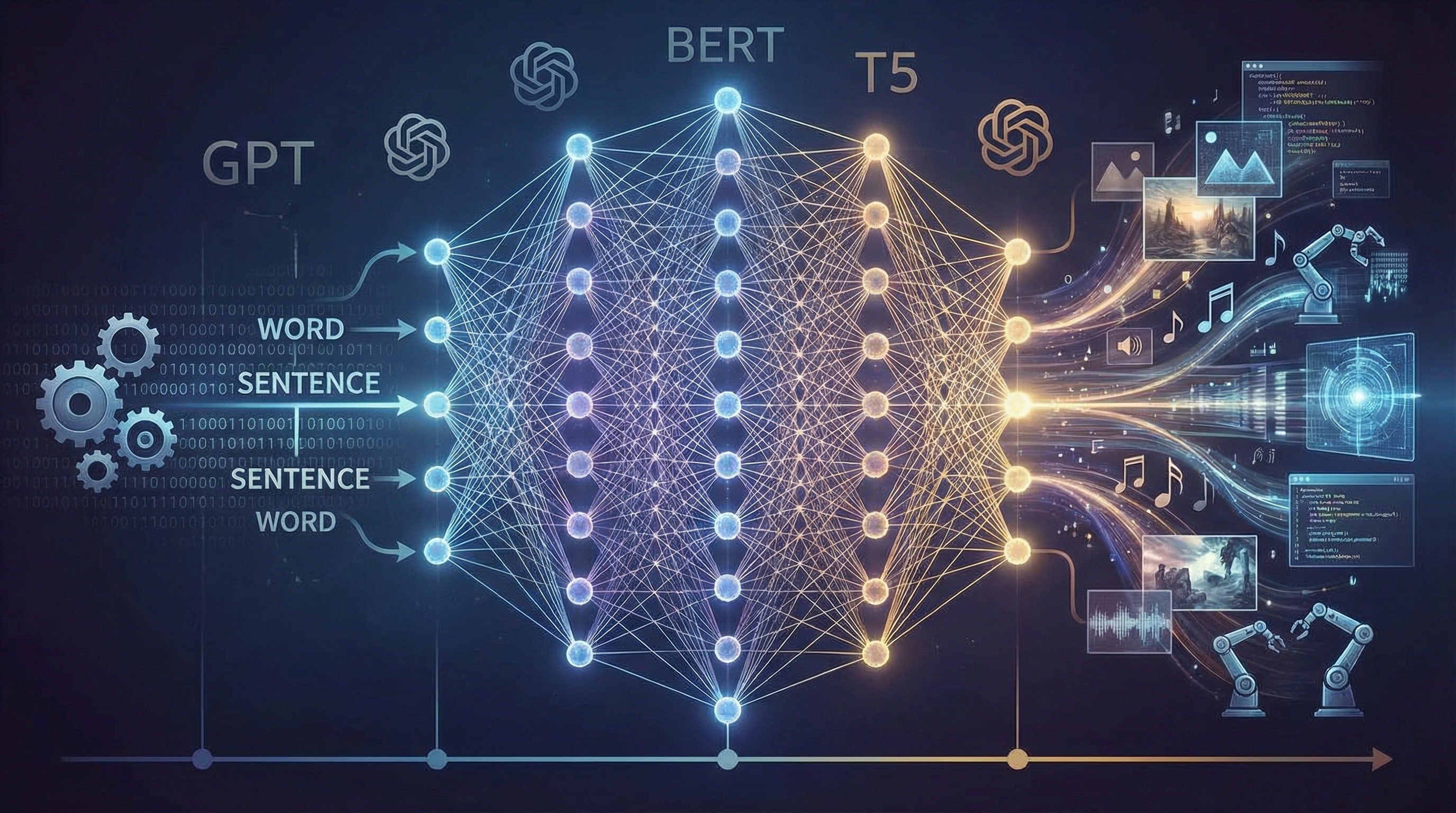

The story of modern LLMs begins with the Transformer architecture, introduced in 2017 in the paper “Attention Is All You Need.” Attention mechanisms already existed, but the Transformer dropped recurrent neural networks entirely and relied on attention alone. Because no step has to wait for the hidden state of the previous word, the model can process every position of a training sequence in parallel rather than word by word. The key innovation was self-attention, which lets the model weigh how relevant each word in a sentence is to every other word when building that word’s representation.
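
Self-attention fits in a few lines of code. The following is a minimal single-head sketch in plain Python. It compares each token’s query with every token’s key, scales the scores by the square root of the key dimension, and turns them into weights with a softmax. The output for each token is then the weighted sum of the value vectors. The toy embeddings and the identity projection matrices are assumptions chosen for readability. A real model learns its projections and runs many heads in parallel on optimized tensor libraries.

```python
import math


def softmax(xs):
    """Turn a list of scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (both given as lists of rows)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: one embedding vector per token (n x d_model).
    w_q, w_k, w_v: projection matrices (d_model x d_k); learned in a real model.
    Returns the new token vectors and the n x n attention weights, where
    weights[i][j] is how strongly token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # every query compared with every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Three toy tokens with 2-dimensional embeddings; identity projections for readability.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(p, 3) for p in row])
```

Each row of `weights` sums to 1. The third token, whose embedding overlaps with both others, still gives the largest weight to itself.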

The original Transformer has two main components: an encoder and a decoder. The encoder turns input text into rich representations of meaning, while the decoder generates output text one token at a time, drawing on those representations and on the tokens it has already produced. The GPT models discussed below use only the decoder stack. This architecture proved remarkably scalable, paving the way for increasingly large and capable models.
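
In a decoder, self-attention is masked so that each position can attend only to itself and earlier positions. This is what lets GPT-style models be trained to predict the next token without seeing it. The sketch below assumes the raw attention scores have already been computed; the score values are made up for illustration. It applies this causal mask by setting future positions to negative infinity before the softmax.

```python
import math


def causal_attention_weights(scores):
    """Mask an n x n matrix of attention scores causally, then softmax each row.

    Row i may only attend to columns 0..i; later positions get a score of -inf,
    which becomes a weight of exactly 0 after exponentiation.
    """
    n = len(scores)
    weights = []
    for i in range(n):
        masked = [scores[i][j] if j <= i else float("-inf") for j in range(n)]
        m = max(masked)  # always finite: position i can attend to itself
        exps = [math.exp(s - m) for s in masked]  # math.exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Made-up raw scores for a 3-token sequence.
scores = [[0.5, 2.0, 1.0],
          [1.0, 1.0, 3.0],
          [0.0, 0.0, 0.0]]
w = causal_attention_weights(scores)
for row in w:
    print([round(p, 3) for p in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

The first token has its highest raw score (2.0) on the second token, yet it receives all its weight from itself, because the second token lies in its future.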

The GPT Revolution

OpenAI’s Generative Pre-trained Transformer (GPT) series demonstrated the power of scaling up language models. GPT-1, released in 2018, showed that pre-training on large amounts of unlabeled text followed by fine-tuning on specific tasks could achieve strong results across many language-understanding benchmarks. However, it was GPT-2 (2019) and GPT-3 (2020) that truly captured the world’s attention.

GPT-3, with 175 billion parameters, could perform many tasks it was not explicitly trained for, from writing code to composing poetry. It needed only a few examples in the prompt and no update to its weights. This “few-shot” or in-context learning suggested that scaling up models could, by itself, unlock qualitatively new capabilities.
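
Few-shot prompting needs no special training step: the examples are just text placed before the new input, and the model continues the pattern. Below is a minimal sketch of assembling such a prompt. The function name and the “Input:”/“Output:” layout are hypothetical choices, not a format GPT-3 requires.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: an instruction, worked input/output pairs,
    then the new input. The "Input:"/"Output:" labels are a hypothetical layout;
    the model is expected to continue the text after the final "Output:"."""
    lines = [instruction, ""]
    for inp, out in examples:
        lines.append(f"Input: {inp}")
        lines.append(f"Output: {out}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("house", "maison")],
    "cat",
)
print(prompt)
```

The model’s weights never change here. All the “learning” happens within a single forward pass over this text, which is why the behavior is called in-context learning.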

The release of GPT-4 in 2023 marked another leap forward: the model accepts images as well as text as input, although it still responds in text. This expansion beyond pure text processing was an important step toward more general-purpose AI systems.

Training at Scale

Training these massive models requires enormous computational resources and sophisticated techniques. The process typically involves three main stages: pre-training, supervised fine-tuning, and reinforcement learning from human feedback (RLHF).

During pre-training, models learn from vast amounts of text scraped from the internet, books, and other sources. They learn to predict the next token (a word or word fragment) in a sequence. This objective is simple, yet doing it well requires capturing grammar, facts, reasoning patterns, and even some common sense. This phase can take months and cost millions of dollars in computing resources.
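
The next-token objective can be made concrete with a toy example. The sketch below swaps the neural network for a count-based bigram model, an assumption made purely for illustration; real LLMs use Transformers over subword tokens, not whole words. It then computes the average cross-entropy: the negative log-probability the model assigns to each actual next word. Pre-training drives this number down across the whole corpus.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Estimate P(next | current) by counting adjacent pairs of tokens."""
    counts = defaultdict(Counter)
    for cur, nxt in zip(tokens, tokens[1:]):
        counts[cur][nxt] += 1
    probs = {}
    for cur, ctr in counts.items():
        total = sum(ctr.values())
        probs[cur] = {nxt: c / total for nxt, c in ctr.items()}
    return probs


def next_token_loss(model, tokens):
    """Average cross-entropy: mean of -log P(actual next token | current token).
    Assumes every (current, next) pair was seen in training; unseen pairs raise KeyError."""
    losses = [-math.log(model[cur][nxt]) for cur, nxt in zip(tokens, tokens[1:])]
    return sum(losses) / len(losses)


tokens = "the cat sat on the mat".split()
model = train_bigram(tokens)
print(model["the"])  # {'cat': 0.5, 'mat': 0.5}
loss = next_token_loss(model, tokens)
print(round(loss, 4))
```

The only uncertainty in this tiny corpus is what follows “the,” so only those two predictions contribute to the loss. A neural LLM faces the same objective, but it conditions on the whole preceding context rather than on one word.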

Supervised fine-tuning involves training the model on high-quality examples of desired behavior, often created by human annotators. This helps align the model’s outputs with human expectations and reduces harmful or unhelpful responses.
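
Supervised fine-tuning typically reuses the same next-token cross-entropy, now computed on curated prompt/response demonstrations. A common choice, assumed here rather than taken from any specific system, is to mask out the prompt so that only response tokens contribute to the loss. The sketch further assumes that a model has already produced the probability of each correct token; the numbers are made up.

```python
import math


def sft_loss(target_probs, is_response):
    """Masked next-token loss for one demonstration.

    target_probs[i]: probability the model assigned to the correct token at position i
                     (assumed to come from some model; here just made-up floats).
    is_response[i]:  True if position i belongs to the demonstrated response.
    Only response positions are averaged, so the model is trained to produce the
    demonstrated answer rather than to reproduce the prompt.
    """
    terms = [-math.log(p) for p, keep in zip(target_probs, is_response) if keep]
    return sum(terms) / len(terms)


# Hypothetical example: 3 prompt tokens followed by 2 response tokens.
probs = [0.1, 0.2, 0.05, 0.5, 0.25]
mask = [False, False, False, True, True]
loss = sft_loss(probs, mask)
print(loss)
```
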

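RLHF goes further. Humans rank several model outputs for the same prompt, and those rankings are used to train a separate reward model that predicts which responses people prefer. The language model is then optimized with reinforcement learning (commonly the PPO algorithm) to produce responses that the reward model scores highly. This technique has been central to making models more helpful, harmless, and honest.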
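
In RLHF, human rankings are turned into a training signal by a reward model, which is commonly trained with a pairwise loss on each comparison, as in OpenAI’s InstructGPT work. For a chosen response and a rejected one, the loss is -log(sigmoid(r_chosen - r_rejected)). The sketch below assumes the two scalar rewards have already been produced by some reward model; the reinforcement-learning stage that follows is not shown.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Bradley-Terry style loss for a reward model: -log(sigmoid(r_chosen - r_rejected)).

    Small when the reward model already scores the human-preferred response
    higher, large when it prefers the rejected one.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); the two branches avoid overflow in exp.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(pairwise_preference_loss(2.0, 0.0))  # agrees with the human ranking: low loss
print(pairwise_preference_loss(0.0, 2.0))  # disagrees: high loss
```

Minimizing this loss over many comparisons teaches the reward model to score outputs in the order humans prefer. That learned score is what the language model is then optimized against.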

Multimodal Intelligence

The frontier of LLM research has shifted toward multimodal models that can process and generate multiple types of data. These systems can understand images, audio, and video alongside text, enabling richer interactions and more versatile applications.

Models like GPT-4V (Vision) and Google’s Gemini can analyze photographs, interpret charts, and even understand memes. They can describe what they see, answer questions about visual content, and generate text that references specific elements in images. This capability opens up applications in accessibility, education, content moderation, and creative tools.

The next frontier involves models that can generate images, audio, and video in addition to understanding them. Systems like DALL-E, Midjourney, and Stable Diffusion have already demonstrated impressive image generation capabilities, while models like AudioLM and MusicLM are pushing boundaries in audio synthesis.

Challenges and Limitations

Despite their impressive capabilities, LLMs face several significant challenges. Hallucination remains a persistent problem: models confidently generate false information that sounds plausible. This happens because models are trained to produce likely continuations of text, an objective that rewards fluency and plausibility rather than factual accuracy.

Bias is another critical concern. Since models learn from internet data, they can absorb and amplify societal biases present in their training data. Researchers are actively working on techniques to detect and mitigate these biases, but it remains an ongoing challenge.

The computational cost of training and running these models also raises environmental concerns. By one widely cited 2019 estimate, training a single large model, including the search over many candidate architectures, can emit as much carbon as several cars over their lifetimes. Making these systems more efficient is crucial for sustainable AI development.

The Path Forward

The future of LLMs likely involves several key developments. Efficiency improvements will make powerful models more accessible and environmentally sustainable. Model compression, quantization (storing weights at lower numerical precision), and sparse architectures can substantially reduce computational and memory requirements, often with little loss in performance.
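
Quantization can be illustrated with a minimal sketch of symmetric “absmax” 8-bit quantization. The largest absolute weight is mapped to 127, every weight is rounded to the nearest integer on that scale, and multiplying by the scale recovers an approximation. The weight values are made up, and the single per-tensor scale is a simplifying assumption; practical schemes often use per-channel or per-group scales. The closing arithmetic uses GPT-3’s 175 billion parameters (and 1 GB = 10^9 bytes) to show how precision drives memory for the weights alone.

```python
def quantize_int8(weights):
    """Symmetric absmax quantization: map floats to integers in [-127, 127]
    with a single scale factor shared by the whole list (per-tensor scaling)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    return [round(w / scale) for w in weights], scale


def dequantize(q, scale):
    """Recover approximate floats; each value is off by at most about scale / 2."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.031, 0.9, -0.77]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(restored)

# Back-of-the-envelope weight storage for a 175-billion-parameter model
# (weights only; activations and other runtime buffers are ignored).
params = 175e9
memory_gb = {name: params * nbytes / 1e9
             for name, nbytes in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]}
print(memory_gb)  # {'16-bit': 350.0, '8-bit': 175.0, '4-bit': 87.5}
```

Halving the bits per weight halves the storage. The cost is a rounding error bounded by half the scale factor, which is why outlier weights that inflate the scale are a known difficulty for simple per-tensor schemes.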

Specialized models trained for specific domains or tasks may prove more practical than general-purpose giants. A medical AI doesn’t need to know about cooking recipes, and focusing training on relevant data can improve performance while reducing costs.

Integration with other AI systems will create more capable agents. Combining LLMs with computer vision, robotics, and planning systems could enable AI assistants that can perceive the world, reason about it, and take actions to accomplish complex goals.

Improved reasoning capabilities represent another frontier. Current models excel at pattern matching and information retrieval but often struggle with multi-step reasoning and planning. Research is helping models break down complex problems and solve them systematically. Key techniques are chain-of-thought prompting, where the model writes out intermediate steps before answering, and tool use, where it calls external programs such as calculators or search engines.
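
Tool use can be sketched as a loop. The model either emits a structured tool request or a final answer. The surrounding program executes each request and feeds the result back, so a multi-step problem is solved one verified step at a time. Everything here is hypothetical and chosen for illustration: the JSON request format, the tool registry, and the scripted `fake_model` standing in for a real LLM. Real providers define their own tool-calling interfaces.

```python
import json

# Hypothetical tool registry; real systems declare tools through their own APIs.
TOOLS = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}


def fake_model(history):
    """Scripted stand-in for an LLM: computes (3 + 4) * 5 in two tool calls,
    then gives a final answer once both results are in the history."""
    results = [m["content"] for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return json.dumps({"tool": "add", "args": [3, 4]})
    if len(results) == 1:
        return json.dumps({"tool": "multiply", "args": [results[0], 5]})
    return f"The answer is {results[1]}."


def parse_tool_call(reply):
    """Return the request dict if the reply is a JSON tool call, else None."""
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return None
    return call if isinstance(call, dict) and "tool" in call else None


def run_agent(question, model, max_steps=5):
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = model(history)
        call = parse_tool_call(reply)
        if call is None:
            return reply  # not a tool call: treat it as the final answer
        result = TOOLS[call["tool"]](*call["args"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("no final answer within max_steps")


answer = run_agent("What is (3 + 4) * 5?", fake_model)
print(answer)  # The answer is 35.
```

The arithmetic is done by ordinary code rather than by the model’s next-token predictions. Each intermediate result is exact, and the model only has to decide which step to take next.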

Conclusion

Large language models have transformed artificial intelligence from a specialized research field into a technology that touches millions of lives daily. From writing assistance to code generation, from customer service to creative tools, LLMs are reshaping how we interact with computers and information.

The rapid pace of progress suggests we’re still in the early stages of this revolution. As models become more capable, efficient, and aligned with human values, they’ll unlock applications we can barely imagine today. The challenge for researchers, developers, and society is to guide this technology toward beneficial outcomes while managing its risks and limitations.

The evolution of LLMs from text-only next-word predictors to sophisticated multimodal systems demonstrates the power of scale, architecture, and training techniques. As we continue to push the boundaries of what’s possible, these models will play an increasingly central role in shaping the future of technology and human-computer interaction.