Modular Blockchains and Decentralized AI: The Future of Web3

The blockchain industry is changing direction. For years, developers built monolithic chains that tried to do everything—process transactions, validate them, reach consensus, store data. All on one chain. It didn’t scale well. At the same time, AI development became the exclusive playground of a few companies with billion-dollar budgets and warehouse-sized GPU clusters. Now both fields are splitting apart in similar ways. Blockchains are going modular. AI training is going decentralized. And these two shifts are happening at exactly the right time to support each other.

The Modular Blockchain Revolution

The all-in-one design was elegant in theory: a single chain processes transactions, validates them, reaches consensus, and ensures data availability. In practice it broke down as soon as usage grew, because every improvement in one function had to fit within the limits of all the others.

Understanding Modular Architecture

Modular blockchains split operations into specialized layers. Each layer does one thing well instead of everything poorly.

Execution Layer: Processes transactions and runs smart contracts. Rollups like Arbitrum and Optimism execute transactions off the base chain, which lets them offer much higher throughput and lower fees while borrowing security from their base layer.

Settlement Layer: Handles dispute resolution and bridges different execution environments. Ethereum increasingly plays this role for Layer 2 solutions.

Consensus Layer: Orders transactions and gets network participants to agree on the blockchain’s state.

Data Availability Layer: Makes sure transaction data stays accessible for verification. Celestia pioneered this specialization, building infrastructure that other chains can use without running their own.

Each layer optimizes for its specific job. Execution layers can experiment with new virtual machines. Data availability layers focus exclusively on keeping data accessible at the lowest cost. Nobody has to compromise.

The Economics of Modularity

The numbers tell the story. According to CCN’s January 2026 analysis, modular ecosystems are leading in TVL growth and developer activity. The cost advantages explain why.

In monolithic systems, every node processes every transaction, stores all state, maintains complete blockchain copies. Want more throughput? You need more powerful hardware. Fewer people can afford to run nodes. Decentralization suffers.

Modular blockchains break this pattern. A rollup processes thousands of transactions per second while posting only compressed data to its data availability layer. Validators on that layer don’t execute transactions—they just ensure data is available. Hardware requirements drop. Transaction costs plummet.

A complex DeFi transaction might cost $50 on a congested monolithic chain. The same transaction on a modular stack costs pennies. That’s not a small improvement. That’s the difference between “only whales can afford this” and “anyone can participate.”
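The amortization behind those savings can be sketched in a few lines. This is a minimal illustration, not any rollup's actual batching format: the JSON encoding, the `per_tx_posting_cost` helper, and the per-byte price are all assumptions chosen for readability.

```python
import json
import zlib


def per_tx_posting_cost(transactions, cost_per_byte):
    """Return (compressed_size_bytes, posting_cost_per_transaction) for one batch."""
    # Serialize the whole batch once. Real rollups use compact binary encodings;
    # JSON is used here only to keep the sketch readable.
    raw = json.dumps(transactions, separators=(",", ":")).encode("utf-8")
    compressed = zlib.compress(raw, level=9)
    total_cost = len(compressed) * cost_per_byte
    # The single posting fee is shared by every transaction in the batch.
    return len(compressed), total_cost / len(transactions)


# Hypothetical batch: 1,000 simple transfers with repetitive structure.
batch = [
    {"from": f"0x{i:040x}", "to": f"0x{i + 1:040x}", "value": 1}
    for i in range(1000)
]
raw_size = len(json.dumps(batch, separators=(",", ":")).encode("utf-8"))
size, cost = per_tx_posting_cost(batch, cost_per_byte=0.00001)  # assumed price, arbitrary units
print(f"raw={raw_size} B, compressed={size} B, cost per tx={cost:.8f}")
```

The point of the sketch is the division at the end: the data availability layer charges for bytes, not for execution, so a larger and more compressible batch lowers the cost each transaction carries.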

Sovereignty and Customization

Modularity enables something beyond cost savings: sovereignty. Deploy on a monolithic chain and you accept its rules. Its programming model. Its governance. Its culture. Modular blockchains let developers customize their execution environment while still using shared security and data availability.

Gaming applications deploy chains optimized for high-frequency, low-value transactions. DeFi protocols create execution environments with custom precompiles for complex financial operations. Each makes different tradeoffs based on what matters to them.

Decentralized AI: Breaking the Monopoly

While blockchains were working through their scaling problems, AI hit a centralization wall. Training frontier models became something only a handful of companies could afford. OpenAI’s GPT-4 reportedly cost over $100 million to train. Anthropic’s CEO has said that training runs costing more than $1 billion are already underway.

This raises uncomfortable questions. Who decides what these systems can do? Who benefits? Can open-source AI survive when entry costs are measured in hundreds of millions?

The Technical Challenge

Decentralized AI training faces real obstacles. In centralized data centers, GPUs communicate at 1.8 terabytes per second using NVIDIA’s NVLink. They’re connected by InfiniBand networks that make them function as one unified compute fabric.

Decentralized training works over regular internet. Bandwidth measured in megabytes per second. Unpredictable latency. Coordinating thousands of GPUs across continents to train a single model seemed impossible.

Until recently.
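To put the bandwidth gap in numbers, here is a back-of-the-envelope calculation. The 1.8 TB/s figure is the NVLink number quoted above; the model size, the 16-bit gradient format, and the 100 MB/s internet link are assumptions picked for illustration, and latency is ignored entirely.

```python
def sync_seconds(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Time to move one full copy of the gradients over a link, ignoring latency."""
    return num_params * bytes_per_param / bandwidth_bytes_per_s


PARAMS = 10_000_000_000   # assumed 10B-parameter model
BYTES_PER_PARAM = 2       # assumed 16-bit gradients
NVLINK = 1.8e12           # 1.8 TB/s, the figure quoted in the text
INTERNET = 100e6          # assumed 100 MB/s internet link

datacenter = sync_seconds(PARAMS, BYTES_PER_PARAM, NVLINK)
internet = sync_seconds(PARAMS, BYTES_PER_PARAM, INTERNET)
print(f"NVLink: {datacenter:.3f} s, internet: {internet:.0f} s, "
      f"ratio: {internet / datacenter:,.0f}x")
```

Under these assumptions a single full gradient exchange takes about 0.011 seconds inside the data center and 200 seconds over the internet, a gap of roughly 18,000x. Synchronizing on every training step at that cost is not viable, which is why the approaches below focus on communicating less often and sending less each time.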

Breakthrough Innovations

Several projects proved decentralized training works. The key is reducing communication overhead between nodes.

Nous Research pioneered Decoupled Momentum Optimization (DeMo), cutting communication requirements by 10x to 1,000x depending on model size. Their DisTrO framework adds compression and asynchronous training—GPUs keep working while updates propagate through the network.

In December 2024, Nous trained a 15 billion parameter model over the internet using DisTrO. Now they’re running Consilience, a 40 billion parameter transformer being pretrained on roughly 20 trillion tokens across their decentralized Psyche network. The training has been mostly smooth, though they’ve hit a few loss spikes that required rolling back to earlier checkpoints.

Prime Intellect took a different path with OpenDiLoCo, an open-source implementation of DeepMind’s DiLoCo method that reported 90-95% compute utilization in early experiments with nodes scattered across continents. Their INTELLECT-1 model, 10 billion parameters trained across three continents, showed that distributed training at this scale is practical. That run sustained 83% utilization across all compute, and 96% when restricted to US nodes.
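The core idea behind DiLoCo-style training, many local steps followed by a rare synchronization, can be shown on a toy problem. This is a simplified sketch and not Prime Intellect's code: the model is a single number, the inner optimizer is plain SGD, and the outer step is plain averaging, whereas the published method uses AdamW inside and Nesterov momentum outside. All names and values are hypothetical.

```python
def local_steps(theta, target, inner_lr, num_steps):
    """Run plain SGD on one worker's own loss, 0.5 * (theta - target) ** 2."""
    for _ in range(num_steps):
        grad = theta - target
        theta -= inner_lr * grad
    return theta


def train(targets, rounds, inner_steps, inner_lr=0.1, outer_lr=1.0):
    """Each round: workers train alone, then exchange a single averaged update."""
    theta = 0.0
    sync_count = 0
    for _ in range(rounds):
        # Every worker starts from the shared parameters and trains without communicating.
        worker_thetas = [local_steps(theta, t, inner_lr, inner_steps) for t in targets]
        # Pseudo-gradient: how far the averaged workers moved away from the shared point.
        pseudo_grad = theta - sum(worker_thetas) / len(worker_thetas)
        theta -= outer_lr * pseudo_grad
        sync_count += 1
    return theta, sync_count


# Hypothetical per-worker data. The global optimum is the mean of the targets, 3.0.
targets = [1.0, 2.0, 3.0, 6.0]
theta, syncs = train(targets, rounds=20, inner_steps=50)
print(f"theta={theta:.4f} after {syncs} syncs instead of {20 * 50}")
```

With 50 local steps per round, the workers communicate 20 times instead of 1,000 times and still land on the shared optimum. Real models do not behave this cleanly, but the communication saving comes from the same structure.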

Pluralis Research introduced Protocol Learning, using model parallelism to split models across nodes so no single participant ever has complete model weights. This enables training larger models and creates an economic model where contributors own equity-like stakes in what they help train.
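The property that no participant holds the full model follows directly from how layers are partitioned. The sketch below is an illustration of model parallelism in general, not Pluralis's protocol: it covers only the forward pass, uses toy one-number layers, and the `partition_layers`, `Node`, and `pipeline_forward` names are invented for the example.

```python
def partition_layers(num_layers, num_nodes):
    """Assign contiguous layer ranges to nodes as evenly as possible."""
    base, extra = divmod(num_layers, num_nodes)
    assignment, start = [], 0
    for node in range(num_nodes):
        size = base + (1 if node < extra else 0)
        assignment.append(range(start, start + size))
        start += size
    return assignment


class Node:
    """A participant that holds only its own slice of the weights."""

    def __init__(self, weights):
        self.weights = list(weights)

    def forward(self, activation):
        for w in self.weights:
            activation = max(0.0, w * activation)  # toy layer: scale, then ReLU
        return activation


def pipeline_forward(nodes, x):
    """Pass activations from node to node; weights never leave their owner."""
    for node in nodes:
        x = node.forward(x)
    return x


all_weights = [0.5, 2.0, 1.5, 1.0, 3.0, 0.25, 2.0]  # hypothetical 7-layer model
slices = partition_layers(len(all_weights), num_nodes=3)
nodes = [Node(all_weights[s.start:s.stop]) for s in slices]
output = pipeline_forward(nodes, 1.0)
print([len(n.weights) for n in nodes], output)
```

Only activations cross the network here, so each node sees the data flowing through its layers but never the weights held by the others. A training system also needs the backward pass and a way to keep the activation traffic small, which is where most of the engineering difficulty lies.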

The Role of Reinforcement Learning

Pre-training scaling laws are showing diminishing returns. Reinforcement learning (RL) has become important for post-training improvements. RL fits decentralized environments well because it involves generating many outputs (forward passes) that can be computed independently. Only periodic synchronization is needed for weight updates.

Prime Intellect’s INTELLECT-2 demonstrated this with a 32 billion parameter reasoning model trained using decentralized RL. Their PRIME-RL framework splits the process into independent stages—generating candidate answers, training on selected ones, broadcasting updated weights. The system works across unreliable, geographically dispersed networks.
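The three stages can be illustrated with a deliberately tiny stand-in. This is not PRIME-RL: the "policy" is just the mean of a Gaussian, the reward is distance to a fixed target, and selection keeps the best samples, which makes it closer to a cross-entropy-method toy than to real RL fine-tuning. Every name and constant is an assumption.

```python
import random


def generate(policy_mean, num_samples, rng):
    """Inference worker: sample candidate answers from the current policy."""
    return [rng.gauss(policy_mean, 1.0) for _ in range(num_samples)]


def reward(answer, target=5.0):
    """Toy reward: answers closer to the target score higher."""
    return -abs(answer - target)


def select(candidates, keep):
    """Keep the highest-reward candidates for training."""
    return sorted(candidates, key=reward, reverse=True)[:keep]


def train_step(policy_mean, selected, lr=0.5):
    """Trainer: move the policy toward the selected answers."""
    return policy_mean + lr * (sum(selected) / len(selected) - policy_mean)


rng = random.Random(0)
policy_mean = 0.0
for step in range(30):
    # Stage 1: four workers generate independently from the last broadcast policy.
    candidates = []
    for _worker in range(4):
        candidates += generate(policy_mean, 16, rng)
    # Stage 2: train only on the selected answers.
    selected = select(candidates, keep=8)
    # Stage 3: the updated value is what gets broadcast for the next round.
    policy_mean = train_step(policy_mean, selected)

print(f"policy mean after training: {policy_mean:.2f}")
```

The generation loop is the expensive part and needs no communication between workers, which is what makes this shape of workload tolerant of slow, unreliable links. Only the small policy update has to travel.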

The Convergence: Why These Technologies Need Each Other

Modular blockchains and decentralized AI solve complementary problems. They create synergies neither could achieve alone.

Infrastructure Alignment

Modular blockchains create specialized layers optimized for specific functions. Decentralized AI needs exactly this kind of infrastructure.

Compute Marketplaces: AI training and inference need distributed GPU resources. Modular blockchain architectures can provide settlement and coordination layers for these marketplaces. They handle payments, reputation systems, dispute resolution. Execution layers optimized for high-throughput microtransactions process the actual compute allocations.

Data Availability: AI models need vast amounts of training data. Modular data availability layers like Celestia can ensure this data stays accessible and verifiable without requiring every node to store complete copies. This matters for decentralized training networks where participants need to verify others are training on correct datasets.

Verification and Trust: Decentralized AI faces a basic problem—how do you verify a remote GPU actually did the computation it claims? Projects like Gensyn’s Verde use deterministic operators and referee protocols to verify AI workloads. These verification systems can use modular blockchain architectures, with specialized execution layers for verification logic while settlement layers handle disputes and slashing.
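One common way to settle such disputes cheaply is bisection: two parties who disagree about a long computation narrow the disagreement to a single step, and the referee re-executes only that step. The sketch below shows the generic idea under strong assumptions (a deterministic toy `step` function and plain state values instead of hash commitments). It is not a description of Verde's actual protocol.

```python
def step(state, i):
    """Deterministic toy computation step, standing in for one training operation."""
    return (state * 31 + i) % 1_000_003


def run(initial, num_steps, cheat_at=None):
    """Return the state after every step; optionally corrupt one step's result."""
    trace, state = [initial], initial
    for i in range(num_steps):
        state = step(state, i)
        if i == cheat_at:
            state += 1  # a dishonest or faulty result
        trace.append(state)
    return trace


def first_divergence(trace_a, trace_b):
    """Binary search for an index where the traces agree just before and differ at it.

    Assumes both traces start from the same state and end in different states.
    """
    lo, hi = 0, len(trace_a) - 1  # invariant: agree at lo, differ at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if trace_a[mid] == trace_b[mid]:
            lo = mid
        else:
            hi = mid
    return hi


def referee(trace_a, trace_b):
    """Re-run only the disputed step and return which party ('a' or 'b') was right."""
    k = first_divergence(trace_a, trace_b)
    expected = step(trace_a[k - 1], k - 1)  # both parties agree on the state at k - 1
    return "a" if trace_a[k] == expected else "b"


honest = run(7, 100)
cheater = run(7, 100, cheat_at=37)
print(first_divergence(honest, cheater), referee(honest, cheater))
```

The referee does logarithmic work in the length of the computation plus one step of re-execution, which is what makes it plausible to run the final check inside a settlement layer. The requirement that every step be bit-for-bit reproducible is the reason deterministic operators matter.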

Economic Synergies

Training a frontier AI model requires coordinating thousands of participants over weeks or months. Blockchain excels at this coordination problem.

Templar, operating on the Bittensor network, shows how this works. Miners contribute compute to training runs and earn TAO tokens based on their contributions’ quality. Validators verify these contributions using loss deltas and skill ratings. The system is permissionless—anyone can contribute compute without approval.

This creates a market for AI training that mirrors modular blockchain philosophy: specialized participants focusing on what they do best, coordinated through economic incentives rather than centralized control.
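A contribution-based payout of this kind can be sketched as a proportional split over measured loss improvements. This is a simplified assumption about the general mechanism, not Templar's actual scoring, which also involves skill ratings and other safeguards. The `reward_shares` function, the miner names, and the numbers are hypothetical.

```python
def reward_shares(loss_before, losses_after, emission):
    """Split `emission` among miners in proportion to the loss improvement each produced.

    `losses_after` maps a miner name to the validation loss measured after
    applying only that miner's update.
    """
    # Updates that make the loss worse earn nothing rather than a negative share.
    deltas = {m: max(0.0, loss_before - after) for m, after in losses_after.items()}
    total = sum(deltas.values())
    if total == 0:
        return {m: 0.0 for m in deltas}
    return {m: emission * d / total for m, d in deltas.items()}


shares = reward_shares(
    loss_before=2.00,
    losses_after={"alice": 1.90, "bob": 1.95, "mallory": 2.10},
    emission=30.0,
)
print({m: round(s, 2) for m, s in shares.items()})
```

In this example the miner who lowered the loss twice as much earns twice the reward, and the one who made it worse earns nothing. A production system has to defend the measurement itself, since a score based on a known validation set invites overfitting to that set.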

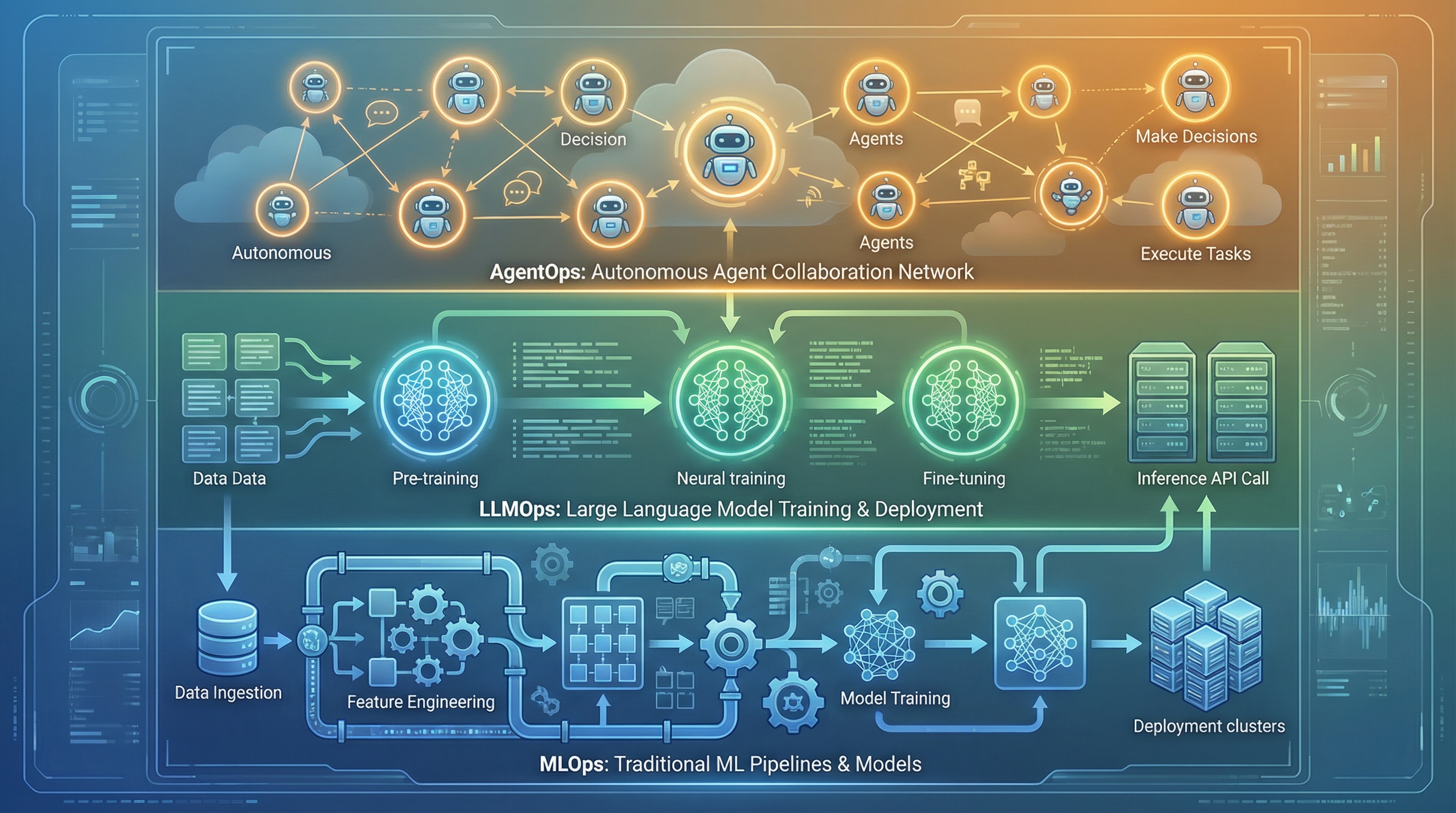

AI Agents as Web3 Primitives

The convergence extends beyond infrastructure. AI agents—autonomous software that analyzes data, makes decisions, executes actions—are becoming fundamental primitives in Web3.

The AI agent market within Web3 has exploded. Market capitalization passed $7.7 billion. Daily trading volumes approach $1.7 billion. These agents operate across multiple categories:

Trading Agents monitor token prices across chains, analyze market data, execute orders on decentralized exchanges. They use modular blockchain infrastructure to access real-time data from multiple chains simultaneously.

Governance Agents participate in DAO voting based on user preferences or custom logic. As DAOs grow more complex, these agents become essential for informed participation at scale.

DeFi Automation Agents rebalance liquidity, auto-compound rewards, execute sophisticated strategies across protocols. They show how AI and modular DeFi infrastructure can combine to create more efficient markets.

Game Economy Agents manage assets and strategies in on-chain games and metaverses, showing how AI can improve user experience in decentralized virtual worlds.

These agents need the low-latency, high-throughput infrastructure that modular blockchains provide. They also benefit from decentralized AI training, which lets them improve through collective learning rather than being controlled by single entities.

Real-World Implementations

The convergence is moving from theory to practice.

Theta Network’s EdgeCloud

Theta Network sits at the intersection of blockchain and AI. Their EdgeCloud platform uses distributed computing resources for AI workloads, with blockchain handling coordination and incentivization. This shows how modular thinking—separating compute provision from coordination and settlement—enables new AI capabilities.

Ethereum’s AI Roadmap

In January, Vitalik Buterin outlined a 2026 roadmap for decentralized AI on Ethereum. It includes ERC-8004, a standard aimed at agentic AI interactions on the blockchain. The standard lets AI agents interact with smart contracts in sophisticated ways, conducting private, verifiable AI transactions with micropayments.

The modular Ethereum ecosystem, with its Layer 2 rollups and specialized execution environments, provides the infrastructure these AI agents need. High-frequency AI operations can happen on optimized rollups. Settlement and verification happen on Ethereum’s base layer.

The Artificial Superintelligence Alliance

The merger of Fetch.ai, SingularityNET, and Ocean Protocol into the Artificial Superintelligence Alliance is a bet on decentralized AI infrastructure. These projects provide different pieces—autonomous agents, AI marketplaces, data sharing protocols—all coordinated through blockchain technology.

Their architecture mirrors modular blockchain principles: specialized components handling specific functions, coordinated through shared protocols rather than centralized control.

What Could Go Wrong

Rapid progress doesn’t mean the problems are solved. Significant challenges remain.

Technical Hurdles

Communication Overhead: Projects have dramatically reduced bandwidth requirements for decentralized training. But the links available over the public internet are still orders of magnitude slower than data center interconnects. Training the largest frontier models still needs data center-level connectivity.

Verification Complexity: Ensuring distributed participants are honestly contributing to AI training or executing inference correctly is computationally expensive. Current verification methods add 5-10% overhead. That compounds at scale.

Interoperability: As modular blockchains and decentralized AI networks proliferate, making them work together becomes harder. Standards for cross-chain AI agent interactions and shared training protocols are still emerging.

Economic Questions

Sustainability: Can decentralized AI training compete economically with centralized alternatives? Costs are falling, but tightly integrated data center infrastructure still has significant efficiency advantages.

Incentive Design: Creating incentive structures that reward honest participation while preventing gaming and collusion is hard. Bittensor subnets face constant cat-and-mouse games between participants trying to game the system and subnet creators trying to prevent it.

Value Capture: How should value be distributed among model designers, compute providers, data contributors, and users? Pluralis’s Protocol Models propose equity-like ownership, but implementation details are complex.

Regulatory Landscape

As AI capabilities grow more powerful and blockchain systems handle more value, regulatory scrutiny intensifies. Decentralized systems must navigate questions about liability, content moderation, and compliance while maintaining their permissionless nature.

What Happens Next

The convergence of modular blockchains and decentralized AI is a statement about how critical infrastructure should be built and controlled.

Near-Term (2026-2027)

Specialized AI Chains: We’ll see rollups and app-chains optimized specifically for AI workloads, with custom precompiles for machine learning operations and gas pricing models that reflect AI computation costs.

Cross-Chain AI Agents: As interoperability protocols mature, AI agents will operate seamlessly across multiple chains, aggregating data and executing strategies across the entire Web3 ecosystem.

Hybrid Training Models: Rather than purely centralized or decentralized, we’ll see hybrid approaches that use decentralized networks for specific training phases while using centralized infrastructure where it makes sense.

Medium-Term (2028-2030)

Fully Decentralized Frontier Models: If communication efficiency keeps improving and verification becomes cheaper, training models competitive with GPT-4 and beyond in fully decentralized settings may become feasible.

AI-Native Blockchain Protocols: New blockchain designs that incorporate AI agents as first-class citizens, with protocol-level support for AI verification, training coordination, and agent interactions.

Decentralized AI Marketplaces: Mature markets for AI services where anyone can contribute compute, data, or models, with blockchain handling coordination, payments, and quality assurance.

Long-Term Implications

The promise here is a Web3 ecosystem that is simultaneously more scalable, more intelligent, and more resistant to centralized control.

Picture this: Any developer can deploy a specialized blockchain optimized for their application in minutes, using shared security and data availability. AI models are trained collectively by global communities, with contributors owning stakes in the models they help create. Autonomous agents manage complex DeFi strategies, participate in governance, coordinate economic activity across chains. The infrastructure for both computation and intelligence is permissionless, verifiable, accessible to anyone.

This isn’t science fiction anymore. Projects like Nous Research, Prime Intellect, and Celestia are laying the technical foundations today. Economic models are being tested in live networks like Bittensor. AI agent tokens already account for billions of dollars in market value.

Conclusion

Modular blockchains and decentralized AI address two of the biggest limitations that have held back Web3: scalability and intelligence. Modular architectures tackle the scalability trilemma by allowing specialization and optimization at each layer. Decentralized AI challenges the concentration of artificial intelligence in a few companies, making advanced capabilities accessible to anyone with compute to contribute.

Together, these technologies create a foundation for Web3 that can actually deliver on its promises. A decentralized internet that’s fast enough for mainstream applications, intelligent enough to provide sophisticated services, open enough that anyone can participate and benefit.

The road ahead is challenging. Technical hurdles must be overcome. Economic models need refinement. Regulatory questions need answers. But the progress over the past two years has been remarkable. Training billion-parameter models across continents seemed impossible. Processing thousands of transactions per second on decentralized infrastructure seemed impossible. Both have now been demonstrated on live networks.

The question isn’t whether modular blockchains and decentralized AI will reshape Web3. It’s how quickly this transformation will happen and what new possibilities it will unlock. For developers, investors, and users willing to engage with these technologies today, the timing is right. The infrastructure is being built now, one modular layer and one decentralized training run at a time.